Amazon Web Services (AWS) has unveiled its latest AI hardware—a supercomputer powered by Trainium2 chips. These AI chips, developed by Amazon, are designed to take on Nvidia, the current leader in the AI chip market.

Apple Joins as a Customer

AWS announced that Apple will use Trainium2 chips, making it one of the early adopters. In addition, AI startup Anthropic will be the first to use the supercomputer, which is built from hundreds of thousands of these new chips.

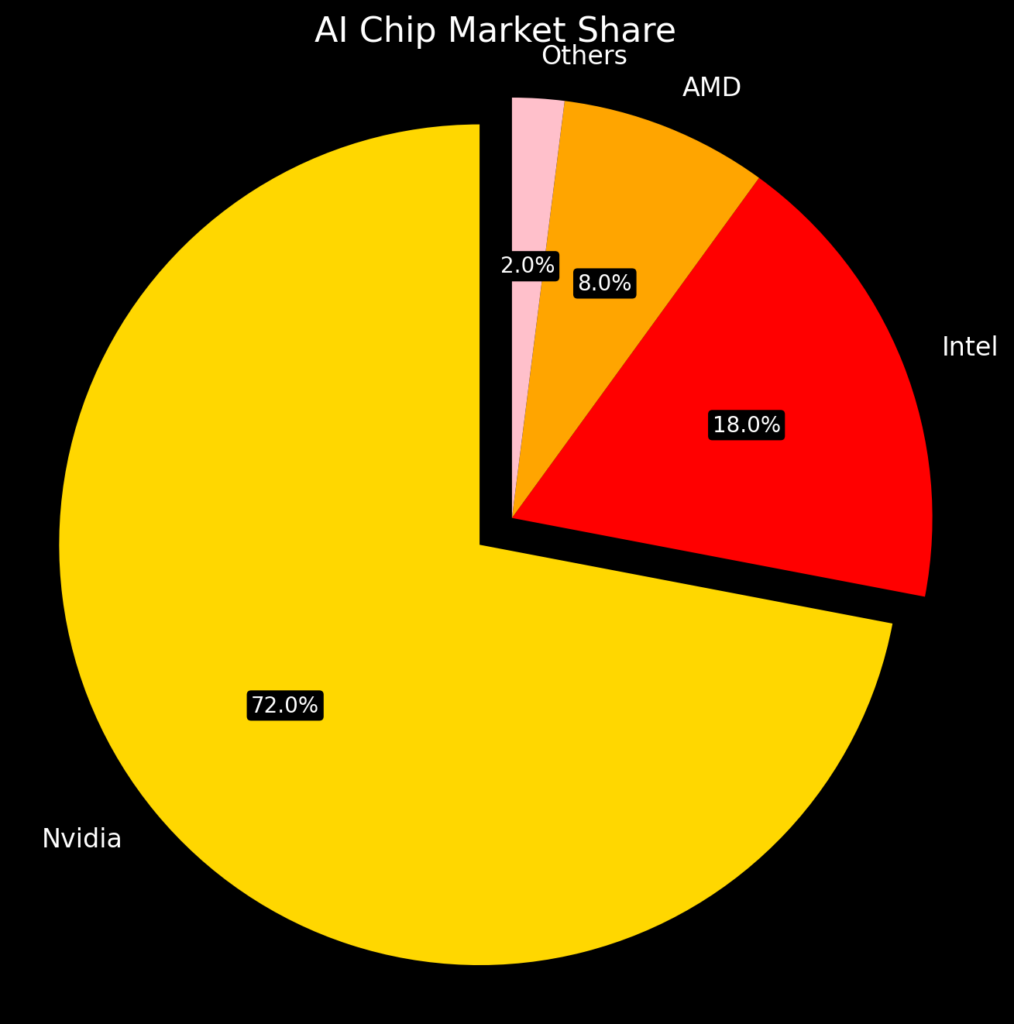

Challenging Nvidia’s Hold on the Market

While Nvidia dominates with over 70% of the AI chip market, companies like Amazon, Meta, Google, and Microsoft are starting to develop their own custom chips. These chips not only support internal operations but are also sold to customers, creating competition for Nvidia.

AWS says its Trn2 UltraServers, equipped with Trainium2 chips, are faster and cheaper than Nvidia’s current offerings. For some AI models, the new servers can lower costs by up to 40%, according to AWS executives.

What’s Next for AWS?

AWS plans to release the next generation, Trainium3, next year. According to AWS, these chips will power future AI systems and let it connect more chips together than Nvidia’s technology allows.

Availability and Supply

AWS plans to make the new servers and supercomputer available in 2025. While Nvidia has faced delays due to supply chain issues, AWS claims its own supply chain is solid, though it relies exclusively on Taiwan Semiconductor Manufacturing Company (TSMC) to produce Trainium chips.

This move marks a significant step for AWS in the competitive AI hardware market, offering businesses more options for advanced AI solutions.

Current Competition

Nvidia:

- Dominates the data-center AI chip market (~65% market share as of 2023).

- Offers high-performance GPUs like A100 and H100.

- Known for substantial markups; by some estimates, production cost is ~$1,000 per chip, with the final product priced up to $30,000.

AMD:

- Competes with the MI300 series targeting AI applications (~11% market share in 2023).

- Focuses on competitive pricing; specific markups not disclosed.

Intel:

- Provides Gaudi series for AI in data centers (~22% market share in 2023).

- An eight-accelerator Gaudi 3 kit is priced at ~$125,000, significantly lower than Nvidia’s comparable products.

AWS (Amazon Web Services):

- Develops Trainium and Inferentia chips for internal and external use.

- Claims up to 40% cost savings compared to Nvidia GPUs.

- Specific market share data not available.

Google:

- Produces custom Tensor Processing Units (TPUs) for AI workloads.

- Primarily for internal use and cloud services; pricing not publicly disclosed.

- Market share information unavailable.

Cerebras Systems:

- Offers Wafer-Scale Engine (WSE) for high-performance AI tasks.

- Targets specialized applications with premium pricing.

- Market share data not available.

Graphcore:

- Develops Intelligence Processing Units (IPUs) for AI processing.

- Pricing details not disclosed but aims to be competitive.

- Market share data not specified.

IBM:

- Introduces AIU chips integrated into broader IBM systems.

- Pricing bundled with IBM services; specifics not disclosed.

- Market share data unavailable.

Qualcomm:

- Focuses on AI chips for mobile and edge devices (e.g., Snapdragon series).

- Offers cost-effective solutions for mobile markets.

- Market share information not available.

Apple:

- Designs M-series processors with integrated AI capabilities.

- AI chips used exclusively in Apple products; pricing not individually disclosed.

- Market share data not specified.